Google DeepMind: AlphaEvolve Paper Review

Today, I'd like to summarize the paper "AlphaEvolve: A coding agent for Scientific and Algorithmic Discovery" published by Google DeepMind in 2025.

AlphaEvolve: A Gemini-powered coding agent for designing advanced algorithms. New AI agent evolves algorithms for math and practical applications in computing by combining the creativity of large language models with automated evaluators.

This research introduces the AlphaEvolve system, which uses AI to improve algorithms and make scientific discoveries automatically.

Below is the English abstract from the original paper:

In this paper, we introduce AlphaEvolve, an evolutionary coding agent that significantly enhances the capabilities of state-of-the-art LLMs on highly challenging tasks such as solving open scientific problems or optimizing core computing infrastructure. AlphaEvolve constitutes an autonomous LLM pipeline that improves algorithms by directly modifying code. Through an evolutionary approach with continuous feedback from one or more evaluators, AlphaEvolve iteratively refines algorithms, potentially leading to novel scientific and practical discoveries. In this paper, we apply this approach to several important computing problems, demonstrating its broad applicability.

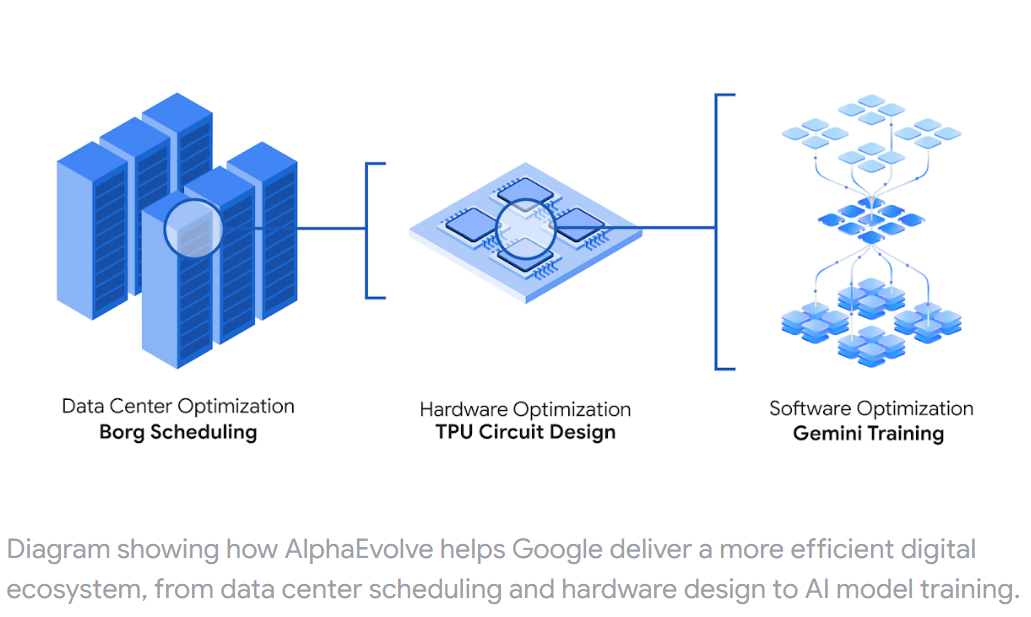

When applied to optimizing core components of Google's large-scale computing stack, AlphaEvolve developed more efficient scheduling algorithms for data centers, discovered functionally equivalent simplifications in hardware accelerator circuit design, and accelerated the training of the LLMs that underpin AlphaEvolve itself. Moreover, AlphaEvolve discovered novel, provably correct algorithms that outperform state-of-the-art solutions across various problems in mathematics and computer science, significantly extending the scope of existing automated search methods. Notably, AlphaEvolve developed a search algorithm that finds a procedure for multiplying two 4×4 complex matrices using 48 scalar multiplications—the first improvement over Strassen's algorithm in this setting in 56 years. We believe that AlphaEvolve and similar coding agents can have significant impact in enhancing problem-solving capabilities across multiple domains of science and computation.

What is AlphaEvolve?

AlphaEvolve is a system that combines large language models (LLMs) with evolutionary computation to automatically improve code. Among other results, it found a way to multiply two 4×4 complex-valued matrices with 48 scalar multiplications (the first improvement over Strassen's algorithm in this setting in 56 years) and improved data center scheduling at Google.

AlphaEvolve is a system where AI directly writes and improves code, combining two key technologies:

- Large Language Models (LLMs): AI models trained on vast amounts of code data that can generate new code or modify existing code.

- Evolutionary Computation: A method that mimics natural selection by generating multiple versions of code and selecting the best ones to pass on to the next generation.

The system scores code performance based on evaluation functions provided by users. For example, in matrix multiplication, the goal is to minimize the number of scalar multiplications.
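To make the combination of generation, scoring, and selection concrete, here is a minimal sketch of an evolutionary loop driven by a user-supplied evaluation function. It is a toy under stated assumptions: the "program" is just a list of integers, the target (all entries equal to 7) is hypothetical, and a random mutation stands in for the LLM, which in AlphaEvolve proposes code edits instead.

```python
import random

# Hypothetical user-supplied evaluation function: higher is better.
# Toy target: make every element of the candidate equal to 7.
def evaluate(candidate: list[int]) -> float:
    return -sum(abs(x - 7) for x in candidate)

# Stand-in for the LLM (an assumption): randomly nudge one position by +/-1.
def mutate(candidate: list[int], rng: random.Random) -> list[int]:
    child = candidate.copy()
    i = rng.randrange(len(child))
    child[i] += rng.choice([-1, 1])
    return child

def evolve(initial: list[int], generations: int = 200,
           population_size: int = 8, seed: int = 0) -> list[int]:
    rng = random.Random(seed)
    population = [initial]
    for _ in range(generations):
        # Variation: produce children from randomly chosen parents.
        children = [mutate(rng.choice(population), rng) for _ in range(population_size)]
        # Selection: only the best-scoring candidates survive to the next generation.
        population = sorted(population + children, key=evaluate, reverse=True)[:population_size]
    return population[0]

best = evolve([0, 0, 0, 0])
print(best, evaluate(best))
```

Because the parents are kept alongside their children during selection, the best score can never get worse from one generation to the next; the LLM's role in the real system is to make the proposed changes far smarter than random nudges.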

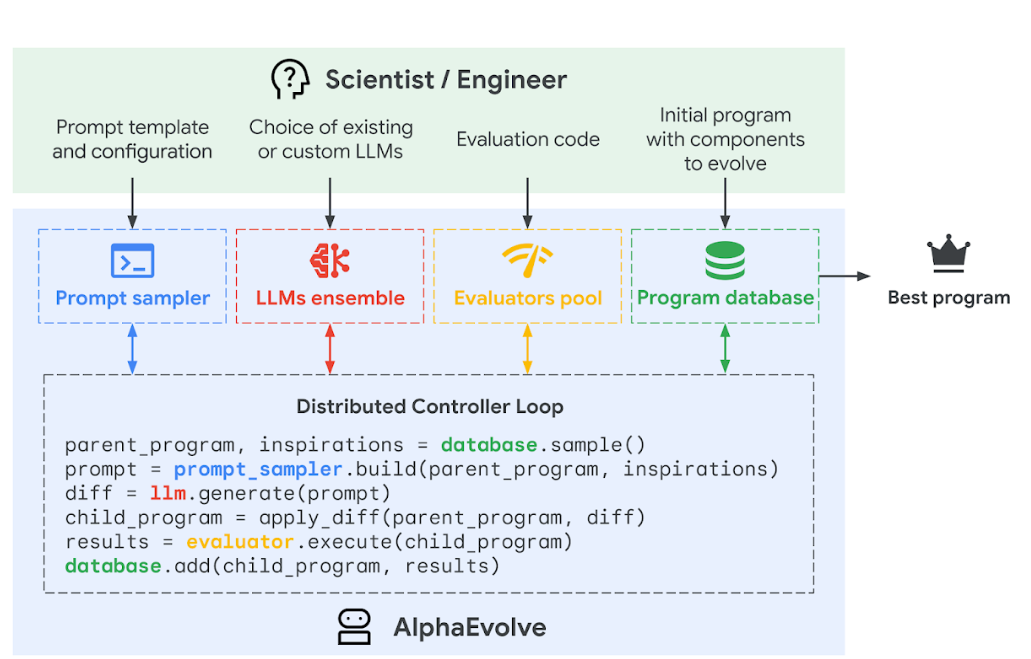

Key Components and How It Works

- Task Specification: Users provide an initial program, mark the parts of it that the system is allowed to evolve, and supply an evaluation function that returns scores for each candidate program.

- Prompt Sampling: A prompt is built from the problem description plus previously discovered solutions sampled from the program database, giving the LLM rich context for proposing improvements.

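- Creative Generation: The LLM proposes code modifications in a "diff" format, i.e., blocks that state which existing code to find and what to replace it with, rather than always rewriting the whole program.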

- Evaluation: Each new program is scored by the evaluation function using an evaluation cascade: candidates must pass small, cheap tests before they are run on larger, harder ones, so weak candidates are discarded early.

- Evolution: Programs and their scores are stored in a database, and high-scoring programs serve as parents for the next generation. The database is inspired by MAP-Elites and island-based population models, which together balance exploration (keeping diverse solutions alive) and exploitation (refining the best ones).

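- Distributed Pipeline: The whole loop runs as an asynchronous pipeline (built on Python's asyncio library), so many LLM generations and evaluations proceed concurrently and throughput is maximized.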
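The diff-based generation step can be illustrated with a small parser. The paper describes the LLM's edits as SEARCH/REPLACE blocks (`<<<<<<< SEARCH`, `=======`, `>>>>>>> REPLACE`); the sketch below is one plausible way to apply such blocks, and the helper name `apply_diff` and its exact parsing rules are hypothetical, not AlphaEvolve's implementation.

```python
import re

# Matches one SEARCH/REPLACE block; DOTALL lets ".*?" span multiple lines.
DIFF_BLOCK = re.compile(
    r"<<<<<<< SEARCH\n(.*?)\n=======\n(.*?)\n>>>>>>> REPLACE", re.DOTALL
)

def apply_diff(source: str, diff: str) -> str:
    """Apply every SEARCH/REPLACE block in `diff` to `source` (hypothetical helper)."""
    for search, replace in DIFF_BLOCK.findall(diff):
        if search not in source:
            # Reject edits that do not match the current program exactly.
            raise ValueError(f"SEARCH text not found:\n{search}")
        # Replace only the first occurrence, mirroring a targeted edit.
        source = source.replace(search, replace, 1)
    return source

program = "def step(x):\n    return x + 1\n"
diff = (
    "<<<<<<< SEARCH\n"
    "    return x + 1\n"
    "=======\n"
    "    return x * 2\n"
    ">>>>>>> REPLACE"
)
print(apply_diff(program, diff))
```

Editing by search-and-replace keeps LLM outputs short and leaves untouched code byte-for-byte identical, which is useful when programs are long.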
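The evaluation cascade can be sketched as an evaluation function that returns a dictionary of metrics and stops early when a candidate fails a cheap stage. The task here (scoring a candidate sorting function), the stage design, and the metric names are all hypothetical choices made for illustration.

```python
import random
import time
from typing import Callable

Candidate = Callable[[list[int]], list[int]]

def evaluate(candidate: Candidate) -> dict[str, float]:
    """Hypothetical cascade evaluator for a candidate sorting function."""
    # Stage 1: tiny, cheap correctness tests prune broken candidates early.
    for case in ([], [1], [3, 1, 2], [2, 2, 1]):
        if candidate(list(case)) != sorted(case):
            return {"passed_stage": 0.0, "score": 0.0}

    # Stage 2: larger randomized tests plus a crude timing measurement.
    rng = random.Random(0)
    start = time.perf_counter()
    for _ in range(20):
        data = [rng.randint(0, 1000) for _ in range(2000)]
        if candidate(list(data)) != sorted(data):
            return {"passed_stage": 1.0, "score": 0.0}
    elapsed = time.perf_counter() - start
    # Faster correct candidates receive higher scores.
    return {"passed_stage": 2.0, "score": 1.0 / (elapsed + 1e-9)}

def identity(xs: list[int]) -> list[int]:
    return xs  # wrong: never sorts

def prefix_only(xs: list[int]) -> list[int]:
    return sorted(xs[:5]) + xs[5:]  # passes tiny cases, fails large ones

print(evaluate(identity))                     # {'passed_stage': 0.0, 'score': 0.0}
print(evaluate(prefix_only)["passed_stage"])  # 1.0
print(evaluate(sorted)["passed_stage"])       # 2.0
```

The point of the cascade is cost: `identity` is rejected after a handful of trivial checks and never reaches the expensive benchmark.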
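The program database can be sketched as several islands, each holding a MAP-Elites archive that keeps the best program per "feature cell", with occasional migration between islands. This is a minimal sketch assuming hypothetical feature descriptors and a simple ring migration; the paper only says its database is inspired by these two ideas, and the class names here are illustrative.

```python
import random
from dataclasses import dataclass, field

@dataclass
class Program:
    code: str
    score: float
    features: tuple[int, ...]  # hypothetical behaviour descriptors (e.g. a size bucket)

@dataclass
class Island:
    # MAP-Elites archive: one elite (best program) per feature cell.
    elites: dict[tuple[int, ...], Program] = field(default_factory=dict)

    def add(self, program: Program) -> bool:
        incumbent = self.elites.get(program.features)
        if incumbent is None or program.score > incumbent.score:
            self.elites[program.features] = program
            return True
        return False

    def best(self) -> Program:
        return max(self.elites.values(), key=lambda p: p.score)

class ProgramDatabase:
    """Hypothetical island-based MAP-Elites store."""

    def __init__(self, num_islands: int, seed: int = 0):
        self.islands = [Island() for _ in range(num_islands)]
        self.rng = random.Random(seed)

    def add(self, island_id: int, program: Program) -> bool:
        return self.islands[island_id].add(program)

    def sample_parent(self, island_id: int) -> Program:
        # Sampling across all cells keeps diverse niches in play (exploration);
        # each cell only holds its best program so far (exploitation).
        return self.rng.choice(list(self.islands[island_id].elites.values()))

    def migrate(self) -> None:
        # Copy each island's best program into the next island (ring topology).
        bests = [isl.best() if isl.elites else None for isl in self.islands]
        for i, best in enumerate(bests):
            if best is not None:
                self.islands[(i + 1) % len(self.islands)].add(best)

db = ProgramDatabase(num_islands=2)
db.add(0, Program("v1", score=1.0, features=(0,)))
db.add(0, Program("v2", score=3.0, features=(0,)))  # replaces v1 in cell (0,)
db.add(0, Program("v3", score=2.0, features=(1,)))  # new cell, kept for diversity
db.add(1, Program("w1", score=0.5, features=(0,)))
db.migrate()
print(db.islands[1].elites[(0,)].code)  # v2 (migrated from island 0)
```

Note that `v3` survives even though it scores lower than `v2`, because it occupies a different cell; that is how MAP-Elites preserves diversity.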
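An asynchronous pipeline lets slow steps (LLM calls, evaluations) overlap instead of running one after another. The sketch below uses Python's asyncio with simulated latencies; `llm_generate`, `evaluate`, and the toy objective (reach the value 10) are hypothetical stand-ins, not AlphaEvolve's actual components.

```python
import asyncio
import random

async def llm_generate(parent: int, rng: random.Random) -> int:
    # Hypothetical stand-in for an LLM call: simulated latency plus a proposed edit.
    await asyncio.sleep(rng.uniform(0.001, 0.01))
    return parent + rng.choice([-1, 1, 2])

async def evaluate(program: int) -> float:
    # Hypothetical stand-in for running the evaluator (possibly on another machine).
    await asyncio.sleep(0.001)
    return -abs(program - 10)

async def worker(db: dict[int, float], steps: int, rng: random.Random) -> None:
    for _ in range(steps):
        parent = max(db, key=db.get)  # use the current best program as parent
        child = await llm_generate(parent, rng)
        db[child] = await evaluate(child)  # results arrive asynchronously

async def main(num_workers: int = 4, steps: int = 20) -> dict[int, float]:
    db = {0: await evaluate(0)}  # shared program database
    rng = random.Random(0)
    # Workers run concurrently: while one awaits the "LLM", others make progress.
    await asyncio.gather(*(worker(db, steps, rng) for _ in range(num_workers)))
    return db

db = asyncio.run(main())
best = max(db, key=db.get)
print(best, db[best])
```

Because the coroutines interleave, the exact trajectory depends on timing; only the overall pattern (many generations and evaluations in flight at once, sharing one database) reflects the design described above.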

Achievements

1. Matrix Multiplication Optimization

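- AlphaEvolve found a procedure for multiplying two 4×4 complex-valued matrices with 48 scalar multiplications. The previous best in this setting, 49, comes from applying Strassen's 2×2 algorithm (7 multiplications) recursively (7 × 7 = 49), so this is the first improvement in 56 years.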

- Conceptually, this is similar to implementing a multiplier circuit with fewer logic gates in VLSI: the saving per operation is small, but it adds up when the operation is repeated at scale, which is why such results are of interest for hardware accelerators like GPUs and TPUs.
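The "49" baseline can be checked directly. The sketch below implements Strassen's algorithm recursively on 4×4 matrices while counting scalar multiplications, then prints how the counts compound when a 4×4 scheme is applied recursively to n = 4^k matrices (49^k for Strassen versus 48^k for a 48-product 4×4 scheme). AlphaEvolve's 48-multiplication procedure itself is not reproduced here, and it relies on complex-valued coefficients, so the 48^k column is plain arithmetic rather than a runnable algorithm.

```python
def add(X, Y):
    return [[a + b for a, b in zip(r, s)] for r, s in zip(X, Y)]

def sub(X, Y):
    return [[a - b for a, b in zip(r, s)] for r, s in zip(X, Y)]

def split(M):
    # Split a square matrix into four equal quadrants.
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])

def strassen(A, B, count):
    """Multiply square matrices (size a power of 2) with Strassen's recursion.
    count[0] accumulates the number of scalar multiplications."""
    if len(A) == 1:
        count[0] += 1
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    # Strassen's seven block products (instead of the naive eight).
    M1 = strassen(add(A11, A22), add(B11, B22), count)
    M2 = strassen(add(A21, A22), B11, count)
    M3 = strassen(A11, sub(B12, B22), count)
    M4 = strassen(A22, sub(B21, B11), count)
    M5 = strassen(add(A11, A12), B22, count)
    M6 = strassen(sub(A21, A11), add(B11, B12), count)
    M7 = strassen(sub(A12, A22), add(B21, B22), count)
    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)
    return ([r1 + r2 for r1, r2 in zip(C11, C12)]
            + [r1 + r2 for r1, r2 in zip(C21, C22)])

A = [[i * 4 + j for j in range(4)] for i in range(4)]
B = [[(i + j) % 5 for j in range(4)] for i in range(4)]
count = [0]
C = strassen(A, B, count)
naive = [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]
assert C == naive
print(count[0])  # 49 (the naive method uses 64)

# Applying a 4x4 scheme recursively to n = 4**k matrices:
for k in (1, 2, 3):
    print(f"n={4 ** k}: Strassen {49 ** k}, 48-product scheme {48 ** k}")
```

The gap widens with size (48^k / 49^k shrinks as k grows), which is the sense in which a one-multiplication saving at the 4×4 level compounds.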

2. Optimizing Google's Computing Infrastructure

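- Discovered a scheduling heuristic for Google's Borg cluster manager that recovers, on average, 0.7% of Google's fleet-wide compute resources that would otherwise be stranded (left unusable because a machine runs out of one resource, such as memory, while others remain free).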

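- Proposed a Verilog rewrite that removed unnecessary bits from an arithmetic circuit for matrix multiplication in a TPU design, and sped up a matrix multiplication kernel used in Gemini training by 23%, reducing Gemini's overall training time by about 1%. It also optimized the compiler-generated code of a FlashAttention kernel, which implements the attention mechanism in Transformer models.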

3. Mathematical Discoveries

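- Improved bounds on several open mathematical problems, for example raising the lower bound on the kissing number in 11 dimensions from 592 to 593 and tightening bounds in autocorrelation inequalities (which involve the autoconvolution of a function).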

- Results like these may eventually feed into applied areas: kissing numbers are closely tied to sphere packings and error-correcting codes, which underpin signal processing and, potentially, cryptographic algorithms, and more efficient algorithms can in turn enable more efficient hardware designs.
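For a kissing-number result, the evaluator must verify that a proposed configuration is valid. A simple check, assuming the configuration is given as nonzero vectors pointing to the centres of unit spheres that touch a central unit sphere: after normalizing, every pair must have an inner product of at most 1/2 (an angle of at least 60 degrees), which is exactly the condition that the outer spheres do not overlap. This is a generic sketch, not the paper's evaluator, and the function name is hypothetical.

```python
import itertools
import math

def is_kissing_configuration(vectors: list[tuple[float, ...]], tol: float = 1e-9) -> bool:
    """Check that unit spheres centred at 2*v/|v| all touch the central unit
    sphere without overlapping each other (pairwise angles >= 60 degrees)."""
    units = []
    for v in vectors:
        norm = math.sqrt(sum(x * x for x in v))
        if norm == 0:
            return False  # a zero vector has no direction
        units.append([x / norm for x in v])
    for u, w in itertools.combinations(units, 2):
        # Centres 2u and 2w are at distance >= 2 iff u.w <= 1/2.
        if sum(a * b for a, b in zip(u, w)) > 0.5 + tol:
            return False
    return True

# The 12 vectors with entries (±1, ±1, 0) in some order: a valid 3D configuration.
cubocta = {p for s1 in (1, -1) for s2 in (1, -1)
           for p in itertools.permutations((s1, s2, 0))}
print(len(cubocta), is_kissing_configuration(list(cubocta)))  # 12 True
```

Verification is cheap and exact up to the tolerance, which is what makes problems like this a good fit for an automated evaluator: the hard part is finding a configuration, not checking it.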